[latexpage]

I’m writing a little software rasteriser which lets you apply an arbitrary transformation to a 2D image. This includes translation, rotation, scaling and shearing (the affine transformations) as well as full perspective mapping.

There are a few tricks to do quick affine transformations which I won’t go into here; they’re pretty easy to find, online and in books. Essentially, you exploit the fact that opposite sides are parallel in the quadrilateral (or quad) that defines the boundaries of the transformed image.

What is harder to find is a treatment of perspective mapping, at least in a 2D context.

The mathematics of perspective

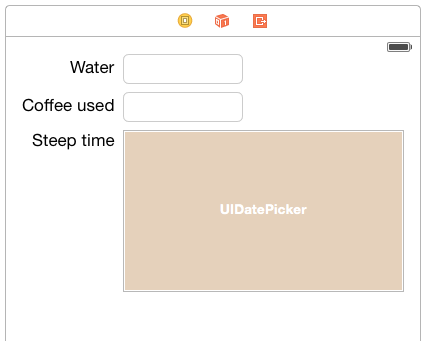

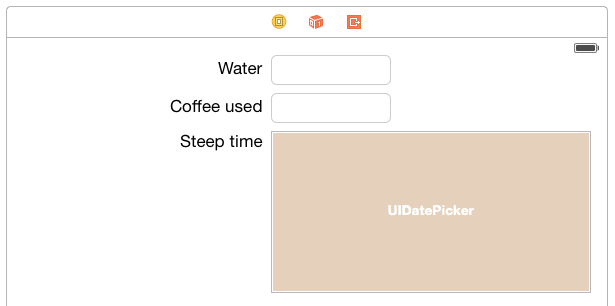

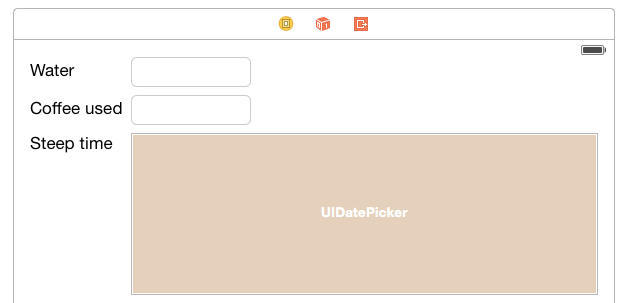

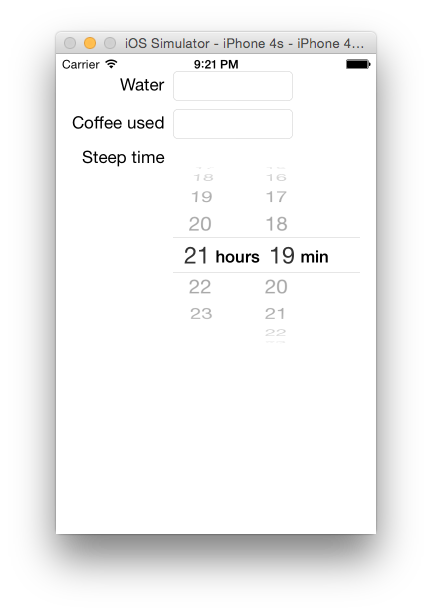

A perspective-mapped rectangle has one or two vanishing points: the points at which the extensions of opposite sides of the rectangle meet. Objects which are regularly spaced apart will appear closer together the further away they are, such as the sleepers on a railway track:

Source: 1

The further back you go, the smaller everything gets. More specifically, the apparent size of an object is inversely proportional to its distance, reaching its limit at the vanishing point where everything is infinitely small. Obviously we can’t just linearly interpolate between the corners of our quad, like we can with an affine transformation; we need to include the depth information in our calculation. The interpolation equation (shamelessly lifted from Wikipedia) is:

$ u_{\alpha} = \frac{ (1 - \alpha ) \frac{ u_0 }{ z_0 } + \alpha \frac{ u_1 }{ z_1 } }{ (1 - \alpha ) \frac{ 1 }{ z_0 } + \alpha \frac{ 1 }{ z_1 } } $

where $ 0 \le \alpha \le 1 $ interpolates between the endpoint values $u_0$ and $u_1$, which lie at depths $z_0$ and $z_1$ respectively.
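The equation translates almost directly into code. Here is a minimal sketch; Python is used purely for illustration, and the function name `perspective_lerp` is hypothetical rather than part of any library.

```python
def perspective_lerp(u0, u1, z0, z1, alpha):
    """Interpolate between u0 and u1, weighting each endpoint by 1/z."""
    w0 = (1.0 - alpha) / z0   # weight of the first endpoint
    w1 = alpha / z1           # weight of the second endpoint
    return (w0 * u0 + w1 * u1) / (w0 + w1)


# Equal depths: behaves like ordinary linear interpolation.
print(perspective_lerp(0.0, 1.0, 1.0, 1.0, 0.5))
# Smaller z1: the same alpha lands closer to u1.
print(perspective_lerp(0.0, 1.0, 1.0, 0.5, 0.5))
```

Note that both depths must be non-zero here; the sketch makes no attempt to handle an endpoint that sits exactly on the vanishing point.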

Extruding flatland at an angle

So that’s great, but how exactly do you get that depth variable $ z $ from a 2D quad? Consider the above equation. When $ z_0 = z_1 = 1 $ it is equivalent to linear interpolation. We know that the vanishing point is asymptotic and that the apparent size is inversely proportional to distance (or depth) – the latter is accounted for in the interpolation equation, so we need only select appropriate reference points.
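As a quick check of the linear-interpolation claim, substituting a depth of 1 at both endpoints into the interpolation equation makes the denominator collapse to one:

$ u_{\alpha} = \frac{ (1 - \alpha ) u_0 + \alpha u_1 }{ (1 - \alpha ) + \alpha } = (1 - \alpha ) u_0 + \alpha u_1 $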

Setting $ z_v = 0 $ to represent the vanishing point will give us an asymptote via division by zero. Setting $ z_0 = 1 $ will leave the point on the quad furthest from the vanishing point unchanged; now all we need is to find the depth of the point closer to the vanishing point $ z_1 $ where $ z_v \leq z_1 \leq z_0 $.

Cross products and angry merchandise

We can find out if there is a vanishing point by using the vector cross-product. If you’re familiar with the vector cross-product, feel free to skip a few paragraphs. Otherwise, here is its definition:

$ \vec{u} \times \vec{v} = (u_y v_z - v_y u_z) \mathbf{i} + (u_z v_x - v_z u_x) \mathbf{j} + (u_x v_y - v_x u_y) \mathbf{k} $

This calculates the vector perpendicular to both $ \vec{u}$ and $ \vec{v}$ in three dimensional space. You will note that, in two dimensions, the z component is always zero, leaving us with the simplified equation

$ \vec{u} \times \vec{v} = (u_x v_y - v_x u_y) \mathbf{k} $

which means that the scalar $ u_x v_y - v_x u_y $ is also the signed magnitude of that vector. It can be considered a measure of ‘perpendicularness’, as is apparent when taking the cross-product of the unit vectors: $ \mathbf{i} \times \mathbf{j} = 1$ and $ \mathbf{i} \times \mathbf{i} = 0$.
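In code the 2D version is a one-liner. A small sketch, with vectors assumed to be `(x, y)` tuples and `cross2` a hypothetical name:

```python
def cross2(u, v):
    """Signed z component of the cross product of two 2D vectors."""
    return u[0] * v[1] - v[0] * u[1]


i = (1, 0)
j = (0, 1)
print(cross2(i, j))  # perpendicular unit vectors
print(cross2(i, i))  # parallel vectors
```

The sign flips when the arguments are swapped, which is what later tells us on which side of A the intersection lies.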

Diversion over. Take the vectors of two opposite sides of our perspective-mapping quad:

\begin{eqnarray*}
\vec{AB} &=& (b_x - a_x, b_y - a_y) \\
\vec{DC} &=& (c_x - d_x, c_y - d_y) \\
\vec{OA} &=& (a_x, a_y) \\
\vec{OD} &=& (d_x, d_y)
\end{eqnarray*}

where $ \vec{OA} $ and $ \vec{OD} $ are the vectors from the origin to points A and D, respectively. If the cross-product $ \vec{AB} \times \vec{DC} = 0 $ then the lines are parallel and there is no vanishing point; otherwise we may find the intersection by calculating the point at which the two line equations are equal:

$ \vec{OA} + \gamma \vec{AB} = \vec{OD} + \delta \vec{DC} $

Cross both sides by $ \vec{DC} $:

\begin{eqnarray*}
(\vec{OA} + \gamma \vec{AB}) \times \vec{DC} &=& (\vec{OD} + \delta \vec{DC}) \times \vec{DC} \\
\vec{OA} \times \vec{DC} + \gamma \vec{AB} \times \vec{DC} &=& \vec{OD} \times \vec{DC} + \delta \vec{DC} \times \vec{DC} \\
\gamma &=& \frac{(\vec{OD} - \vec{OA}) \times \vec{DC}} {\vec{AB} \times \vec{DC}}
\end{eqnarray*}

The last step makes use of the cross-product identity $ \vec{DC} \times \vec{DC} = 0 $, which eliminates the $ \delta $ term.

Now that we have our value for $ \gamma $ we may substitute it into the first line equation to find our intersection point, a.k.a. the vanishing point:

$ (v_x, v_y) = \vec{OA} + \vec{AB} \frac{(\vec{OD} - \vec{OA}) \times \vec{DC}} {\vec{AB} \times \vec{DC}} $

Note that if $ \gamma $ is negative then the intersection lies in the direction opposite $ \vec{AB} $, making B the farthest point from the vanishing point and therefore the nearest point to us.
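The whole test-and-intersect step can be sketched as follows. Points are assumed to be `(x, y)` tuples; the function names and the `eps` tolerance for the parallel test are my own assumptions, not something fixed by the maths.

```python
def cross2(u, v):
    """Signed z component of the cross product of two 2D vectors."""
    return u[0] * v[1] - v[0] * u[1]


def vanishing_point(a, b, d, c, eps=1e-12):
    """Return (gamma, point) where lines AB and DC meet, or None if parallel."""
    ab = (b[0] - a[0], b[1] - a[1])
    dc = (c[0] - d[0], c[1] - d[1])
    denom = cross2(ab, dc)
    if abs(denom) < eps:              # AB x DC = 0: no vanishing point
        return None
    ad = (d[0] - a[0], d[1] - a[1])   # OD - OA
    gamma = cross2(ad, dc) / denom
    return gamma, (a[0] + gamma * ab[0], a[1] + gamma * ab[1])


# A trapezoid whose top and bottom edges converge to the right.
print(vanishing_point((0, 0), (4, 1), (0, 4), (4, 3)))
# A square: opposite sides are parallel.
print(vanishing_point((0, 0), (1, 0), (0, 1), (1, 1)))
```

Returning $ \gamma $ alongside the point keeps the sign available, so the caller can decide whether A or B is the nearest point.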

The depth equation

Now we may calculate the depth of the farthest point $ f $ by linearly interpolating between the nearest point to us (A or B as found in the last paragraph; referred to in the equations as $ n $) and our vanishing point $ v $, and applying our depth formula. Remember that $ z_v = 0 $ and $ z_0 = 1 $.

\begin{eqnarray*}
f &=& z_1 n + (1 - z_1) v \\
&=& v + z_1 (n - v) \\
z_1 &=& \frac{f - v}{n - v}
\end{eqnarray*}
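Since $ f $, $ n $ and $ v $ are points on the same line, the division above is really a ratio of lengths along that line. One way to compute it, sketched here under the assumption that points are `(x, y)` tuples, is to project $ f - v $ onto $ n - v $; `far_depth` is a hypothetical name.

```python
def far_depth(f, n, v):
    """Depth z_1 of point f, given nearest point n (z = 1) and vanishing point v (z = 0)."""
    nv = (n[0] - v[0], n[1] - v[1])
    fv = (f[0] - v[0], f[1] - v[1])
    # Scalar projection of (f - v) onto (n - v), normalised by |n - v|^2.
    return (fv[0] * nv[0] + fv[1] * nv[1]) / (nv[0] * nv[0] + nv[1] * nv[1])


# B lies halfway between A and the vanishing point.
print(far_depth((4, 1), (0, 0), (8, 2)))
```

Using the projection rather than dividing a single coordinate avoids a division by zero when the edge happens to be horizontal or vertical.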

Tying it all together

With two-point perspective, the depth is the same along each parallel edge, with the longest at $ z_0 $ and the other $ z_1 $. However, three-point perspective places each corner at a different depth. We calculate the depth of the two points on the same line as the nearest point, and then calculate the final point’s depth as the product of the two adjacent to it. (Two-point perspective is a special case of this method – can you see why?)

Luckily for us, depth along these lines is a linear function – so we now have everything we need to map perspective. First, find the line upon which our u, v coordinates lie by interpolating by $ \alpha $ on opposite sides of the quad.

\begin{eqnarray*}
\vec{AB_{\alpha}} &=& \frac{ (1 - \alpha ) \frac{ \vec{A} }{ z_A } + \alpha \frac{ \vec{B} }{ z_B } }{ (1 - \alpha ) \frac{ 1 }{ z_A } + \alpha \frac{ 1 }{ z_B } } \\
\vec{DC_{\alpha}} &=& \frac{ (1 - \alpha ) \frac{ \vec{D} }{ z_D } + \alpha \frac{ \vec{C} }{ z_C } }{ (1 - \alpha ) \frac{ 1 }{ z_D } + \alpha \frac{ 1 }{ z_C } } \\
z_{AB} &=& ( 1 - \alpha ) z_A + \alpha z_B \\
z_{DC} &=& ( 1 - \alpha ) z_D + \alpha z_C
\end{eqnarray*}

Now we can calculate u, v by interpolating along the line between those two points.

$ \vec{u_{\alpha}v_{\beta}} = \frac{ (1 - \beta ) \frac{ \vec{AB_\alpha} }{ z_{AB} } + \beta \frac{ \vec{DC_\alpha} }{ z_{DC} } }{ (1 - \beta ) \frac{ 1 }{ z_{AB} } + \beta \frac{ 1 }{ z_{DC} } } $
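Putting the two stages together, here is a rough sketch of the mapping from $ (\alpha, \beta) $ to a point inside the quad. It follows the equations in this section as written and assumes the four corner depths have already been found; the quad is passed in the order A, B, C, D so that AB and DC are opposite sides. The names `lerp_persp` and `map_point` are hypothetical.

```python
def lerp_persp(p0, p1, z0, z1, t):
    """Depth-weighted interpolation between two 2D points."""
    w0 = (1.0 - t) / z0
    w1 = t / z1
    return tuple((w0 * a + w1 * b) / (w0 + w1) for a, b in zip(p0, p1))


def map_point(quad, depths, alpha, beta):
    """Map (alpha, beta) in [0, 1]^2 to a point inside the quad A, B, C, D."""
    a, b, c, d = quad
    za, zb, zc, zd = depths
    # Interpolate by alpha along the two opposite sides AB and DC.
    ab = lerp_persp(a, b, za, zb, alpha)
    dc = lerp_persp(d, c, zd, zc, alpha)
    # Depth is treated as linear along each side.
    z_ab = (1.0 - alpha) * za + alpha * zb
    z_dc = (1.0 - alpha) * zd + alpha * zc
    # Interpolate by beta along the line joining the two points.
    return lerp_persp(ab, dc, z_ab, z_dc, beta)


square = ((0, 0), (1, 0), (1, 1), (0, 1))
trapezoid = ((0, 0), (4, 1), (4, 3), (0, 4))
print(map_point(square, (1, 1, 1, 1), 0.25, 0.5))       # plain bilinear case
print(map_point(trapezoid, (1, 0.5, 0.5, 1), 0.5, 0.0))  # midpoint of edge AB
```

With all depths equal to 1 this reduces to ordinary bilinear interpolation, while in the trapezoid the midpoint of AB is pushed towards the far edge, as you would expect from the railway sleepers.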

This leaves just one part: how do we use these coordinates to render our image, and how do we do so efficiently? This will be revealed in the next part, coming soon…